On July 1, San Francisco District Attorney George Gascón will launch a new artificial intelligence tool meant to eradicate potential racial bias in prosecutors’ charging decisions via a “race-blind charging system.”

The first-of-its-kind algorithmic tool, created by the Stanford Computational Policy Lab, will also be offered free to any other prosecutor’s offices that wish to take part.

“Lady Justice is depicted wearing a blindfold to signify impartiality of the law, but it is blindingly clear that the criminal justice system remains biased when it comes to race,” said District Attorney George Gascón. “This technology will reduce the threat that implicit bias poses to the purity of decisions which have serious ramifications for the accused, and that will help make our system of justice more fair and just.”

In recent years, implicit (or unconscious) bias has become more widely acknowledged as an issue that tangibly affects policing and every other critical stage of the U.S. criminal justice system.

Increasingly, police officials, prosecutors, and public defenders are implementing implicit bias training within their offices, with the hope of reducing racial inequity within the justice system.

San Francisco’s plan goes a step further by bringing in technology that, with luck, will make it far more difficult for prosecutors to make decisions based on those subconscious biases.

“DA Gascón asked a simple question to kick off the project: if his attorneys didn’t know the race of involved individuals when deciding whether to charge a case, would that lead to more equitable decisions?” the Stanford Computational Policy Lab said. “We’re aiming to answer this question with a new, data-driven blind-charging strategy. First, we built a lightweight web platform to replace the DA’s existing paper-based case review process.”

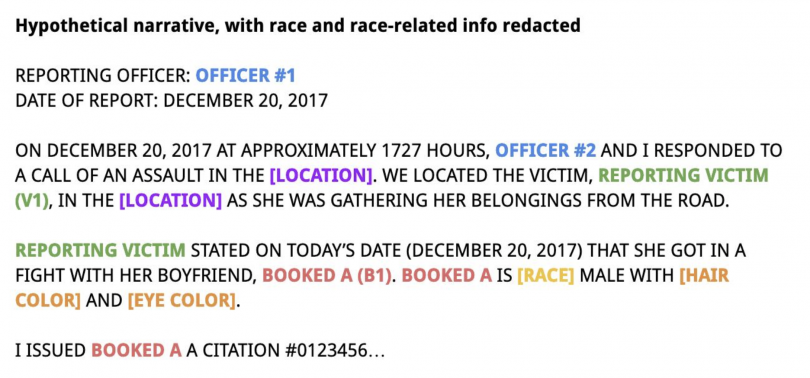

Stanford’s platform will automatically identify race-related information and redact it from crime reports, including “freely written crime narratives.” The redacted details include individuals’ names, hair and eye colors, and home addresses, wherever prosecutors could potentially use that information to infer a person’s race.
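The article does not say how Stanford’s platform detects race-related details. As a rough illustration of the idea only, here is a toy sketch that assumes simple word lists and regular expressions; the `redact` function, its placeholder labels, and the word lists are all hypothetical, and a production system would need far more robust detection.

```python
import re

# Hypothetical, deliberately tiny word lists; a real redaction system would
# need much broader coverage (e.g. named-entity recognition), not regexes.
HAIR_EYE_COLORS = ["blond", "blonde", "brown", "black", "red", "gray", "grey",
                   "blue", "green", "hazel"]
# Hypothetical street-address pattern: house number, capitalized words, suffix.
ADDRESS_PATTERN = re.compile(
    r"\b\d+\s+(?:[A-Z][a-z]+\s)+(?:Street|St|Avenue|Ave|Road|Rd|Blvd)\b")


def redact(narrative: str, names: list) -> str:
    """Return a copy of `narrative` with the given names, street addresses,
    and hair/eye color words replaced by neutral placeholders."""
    text = narrative
    # Give each known person a stable placeholder so the narrative stays readable.
    for i, name in enumerate(names, start=1):
        text = re.sub(rf"\b{re.escape(name)}\b", f"[Person {i}]", text)
    # Mask street addresses, which can act as a proxy for race.
    text = ADDRESS_PATTERN.sub("[Location]", text)
    # Mask color words only when they describe hair or eyes.
    colors = "|".join(HAIR_EYE_COLORS)
    text = re.sub(rf"\b(?:{colors})(?=\s+(?:hair|eyes)\b)", "[Color]",
                  text, flags=re.IGNORECASE)
    return text


print(redact("John Doe, who has brown hair and blue eyes, "
             "was stopped near 123 Main Street.", ["John Doe"]))
```

The placeholders keep the narrative’s structure intact, so a reviewer can still follow who did what without seeing the masked details.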

Working from this redacted information, SF prosecutors will make a preliminary charging decision (phase 1). Once that decision is recorded, prosecutors will have access to the full, unaltered crime information, body camera footage, and other “non-race blind information” (phase 2). If a prosecutor then drops charges or adds new ones, that prosecutor will have to document what additional evidence led to the change. The DA’s office will collect and analyze the resulting data “to identify the volume and types of cases where charging decisions changed from phase 1 to phase 2 in order to refine the tool and to take steps to further remove the potential for implicit bias to enter our charging decisions.”
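The two-phase review described above can be modeled as a small record-keeping workflow. This is a minimal sketch, not the DA’s or Stanford’s actual system: the `ChargingRecord` class, the `finalize` and `summarize_changes` functions, and the representation of charges as sets of strings are all hypothetical. It enforces the one rule the article states (a changed decision must be documented) and tallies the kinds of changes the office says it will analyze.

```python
from collections import Counter
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass
class ChargingRecord:
    """Hypothetical record of one case moving through the two review phases."""
    case_id: str
    phase1_charges: FrozenSet[str]                 # decision on redacted reports
    phase2_charges: Optional[FrozenSet[str]] = None  # decision after full review
    justification: str = ""                        # required if the decision changed

    def finalize(self, charges: set, justification: str = "") -> None:
        """Record the phase 2 decision; any change needs a written reason."""
        final = frozenset(charges)
        if final != self.phase1_charges and not justification.strip():
            raise ValueError(
                f"{self.case_id}: changed charges require documented evidence")
        self.phase2_charges = final
        self.justification = justification

    @property
    def changed(self) -> bool:
        """True once phase 2 is recorded and differs from phase 1."""
        return (self.phase2_charges is not None
                and self.phase2_charges != self.phase1_charges)


def summarize_changes(records) -> Counter:
    """Count changed cases by whether charges were dropped and/or added."""
    tally = Counter()
    for r in records:
        if not r.changed:
            continue
        if r.phase1_charges - r.phase2_charges:
            tally["charges dropped"] += 1
        if r.phase2_charges - r.phase1_charges:
            tally["charges added"] += 1
    return tally
```

Storing both decisions side by side, rather than overwriting the preliminary one, is what makes the later phase 1 versus phase 2 comparison possible.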

Image by Stanford Computational Policy Lab: a hypothetical example of how the blind-charging platform will redact identifying details from crime reports.
