EDITOR’S NOTE: Risk assessment systems have been praised as a means of reducing the country’s astronomic prison population and corrections spending by calculating the likelihood that a person will fail to return to court or commit a new crime if released.

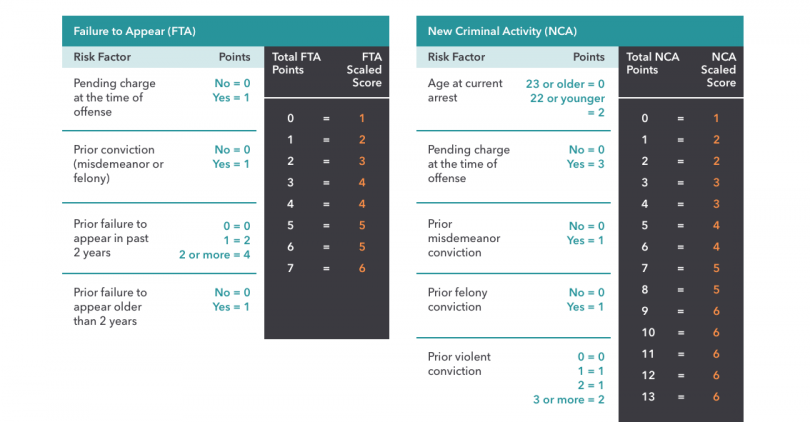

Pre-trial risk assessment tools produce a recommendation either for or against an individual’s release while they await trial. These calculations are based on factors like an individual’s prior convictions, whether they have previously failed to appear in court, current charge(s), education level, employment, age, and more.

A number of California counties use risk assessment in bail decisions, including Humboldt, Imperial, San Francisco, Santa Clara, Santa Cruz, and Ventura. Los Angeles County is working toward piloting risk assessment as part of its bail system reform efforts, as well.

Many law enforcement agencies also use algorithms to predict crime hotspots and to identify people who may be guilty of future crimes. Last week, the LAPD caught heat over how its crime prediction systems are used, after an audit from the Office of the Inspector General suggested the way the tools are implemented may be producing biased policing outcomes.

As the use of algorithmic risk-assessment and predictive policing programs becomes increasingly common across the nation, a growing number of criminal justice experts, advocates, and lawmakers are sounding the alarm about the fallibility of these tools that wield so much power in the criminal justice system.

Critics argue that the predictive analytics are prone to perpetuating the racial and economic discrimination they are meant to reduce. These problems are often masked by too little oversight or transparency.

In the op-ed below, Idaho Rep. Greg Chaney (R-Caldwell), who recently introduced a bill to require pretrial risk assessment tools to be fully transparent and to be verified as bias-free, urges fellow lawmakers to bring sunshine to the algorithms used in their own justice systems.

-Taylor Walker

Using Computers to Predict Risk in Criminal Cases: What Went Wrong?

By Idaho Rep. Greg Chaney (R-Caldwell)

Steven Spielberg’s 2002 film, Minority Report, was built on the seemingly far-fetched premise that in some not-too-distant future, computers would be able to predict from data whether a person would commit a crime. While the movie was something of a disappointment critically and at the box office, it succeeded in doing what science-fiction films often do: forecast the future while warning us about the possible ramifications when human wisdom does not keep up with advancements in science and technology.

The fact is that jurisdictions all around the country are implementing laws that determine what happens to individuals in the criminal justice system based not on what they have done, but what they are predicted to do in the future. The computers used to make these prognostications have already been implemented in every state and their use is growing rapidly.

One of the most disturbing aspects of these systems is the risk assessment algorithms that determine how they work. They are essentially black-box technologies, which means information is put into the system and it spits out results. Depending on what it concludes, a defendant is either released for free, released under supervision and monitoring, or kept in jail with no recourse.

Unfortunately, there is no way to understand how these algorithms draw their conclusions. In theory, these systems work by taking a variety of data about human beings, breaking the data into categories and then using arcane math to figure out what factors may correlate with future behavior. This is called regression analysis.
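For readers unfamiliar with the term, the sketch below shows roughly what a regression fit of this kind could look like. It is an illustration only, not any vendor’s actual method: the records, the two factors (`prior_convictions` and `prior_failure_to_appear`), and the training settings are all invented for the example.

```python
import math

# Invented toy records, NOT real data:
# (prior_convictions, prior_failure_to_appear, outcome)
# outcome = 1 means the person missed court or was rearrested.
RECORDS = [
    (0, 0, 0), (0, 0, 0), (0, 0, 1), (0, 1, 0),
    (1, 0, 0), (1, 0, 1), (2, 1, 1), (3, 0, 0),
    (3, 1, 1), (4, 0, 1), (5, 1, 1),
]


def sigmoid(z):
    """Squash any number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Estimated probability of a bad outcome for one person."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def fit(records, learning_rate=0.1, steps=5000):
    """Fit a logistic regression by plain gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    n = len(records)
    for _ in range(steps):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for priors, fta, outcome in records:
            error = predict(weights, bias, (priors, fta)) - outcome
            grad_w[0] += error * priors
            grad_w[1] += error * fta
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


weights, bias = fit(RECORDS)
low_risk = predict(weights, bias, (0, 0))   # no priors, no missed court dates
high_risk = predict(weights, bias, (5, 1))  # five priors, one missed court date
print("weights:", weights, "bias:", bias)
print("estimated risk:", low_risk, "vs", high_risk)
```

Even in this toy version, knowing the names of the two factors says nothing about the fitted weights or about the records they were learned from.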

Proponents of these technologies claim everything is transparent because they have disclosed the factors that go into calculating the score. But this is akin to going to a restaurant and being told the names of dishes on the menu, but not the ingredients that go into making them, nor any explanation of what you’re actually eating.

Why would the builders and proprietors of the algorithms do this? The explanation may lie in the fact that many of the algorithms were created by for-profit corporations. For example, Arnold Ventures will not disclose how its system was built or allow anyone to see its underlying data. If it did, it would be a fairly simple matter for anyone to steal the algorithm and give it away for free. The same goes for COMPAS, another widely used risk assessment tool.

Data derived from the FBI’s crime files has been used to build some of these tools. Incredibly, the proprietors behind them have had the audacity to claim that federal law and regulation have prevented the disclosure of “sensitive information.” They have also asserted trade secret privilege in order to prevent parties to criminal cases and even judges from ever seeing this crucial data, which, logically, should be available to anyone for examination.

Risk assessment tools are being touted as not only effective, but also fairer than any system without algorithms. However, in practice, two things emerge: they don’t work as planned, and serious concerns have arisen that bias is actually baked into their implementation. Because of this, rather than helping, they are making things worse for those in protected classes, who are legally entitled to be free from discrimination based on race, national origin, and gender.

Recent university studies from Dartmouth College and George Mason University have shown that algorithms are poor predictors of the future, with none coming to a correct conclusion more than 70 percent of the time. In the Dartmouth study, random individuals off the internet were solicited to make guesses against an algorithm. These random folks were able to predict more accurately than the algorithm. Nicholas McNamara, a Wisconsin circuit judge, complained that some people in his state incorrectly regarded its risk assessment tool as somehow allowing them to see into the future, “like a Magic 8 Ball that works.”

As for bias, algorithms had previously not been tested for it in the 20-plus years that risk assessment tools have been in existence. But with the dramatic increase in the use of these tools in recent years has come heightened scrutiny of possible bias. It shouldn’t come as a surprise that dozens of civil rights groups from coast to coast, including the ACLU and NAACP, have now come to their own conclusions that these systems are, in fact, biased.

Predictably, Arnold Ventures tested its system for racial bias using its own vendor and subsequently proclaimed that its algorithm was bias-free. COMPAS, on the other hand, was tested by independent researchers at ProPublica, who came to the conclusion that the tool was biased against African-American defendants.
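One common way to check a tool for this kind of bias is to compare its error rates across groups, for example how often people who did not go on to reoffend were nonetheless flagged as high risk. The sketch below illustrates that check under stated assumptions: the group labels and records are invented, and it is not a reproduction of ProPublica’s analysis or of any vendor’s test.

```python
from collections import defaultdict

# Invented toy records, NOT real data:
# (group, flagged_high_risk, reoffended)
RECORDS = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True), ("A", False, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", True, True), ("B", True, True),
]


def false_positive_rates(records):
    """Share of non-reoffenders in each group who were flagged high risk."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, high_risk, reoffended in records:
        if not reoffended:
            total[group] += 1
            if high_risk:
                flagged[group] += 1
    return {group: flagged[group] / total[group] for group in total}


rates = false_positive_rates(RECORDS)
print(rates)
```

A large gap between groups on a measure like this would be one signal of bias, but running the check at all requires access to the underlying scores and outcomes.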

In light of these findings, I recently introduced legislation in my state of Idaho to bring much-needed light and transparency to this rapidly evolving process. I am also calling for a national wake-up call to confront the reality behind the use of algorithms, following the lead of scholars at New York University, which created the socially conscious AI Now Institute. These algorithms are ultimately nothing less than draconian tools of injustice: they don’t work as advertised, and they cause far more harm to society than good.

It is time we apply some much-needed wisdom to break free from the shackles of technology that we have imposed upon ourselves. I call upon my fellow legislators around the country not to allow what was once the far-fetched premise of a science-fiction film to become a grim reality.

Greg Chaney is serving his second term in the Idaho House of Representatives representing Legislative District 10, which includes most of Caldwell. He has championed reforms to criminal justice, elections law, and taxation. In the House of Representatives, his committees include Revenue & Taxation; Judiciary, Rules, & Administration; and Energy, Environment, & Technology.

Image: Arnold Foundation Public Safety Assessment – Risk Factor Weighting
